ChatGPT local deployment and construction

Posted on 2023-06-06 12:57

This article is a step-by-step tutorial for deploying ChatGLM, an open-source project from Tsinghua University, on a local machine. The open-source release, ChatGLM-6B, can be deployed directly on a local computer for testing, so you can chat with an AI without an Internet connection.

Project address: https://github.com/THUDM/ChatGLM-6B (THUDM/ChatGLM-6B: An Open Bilingual Dialogue Language Model)

Official website introduction:

ChatGLM-6B is an open-source dialogue language model that supports both Chinese and English. It is based on the General Language Model (GLM) architecture and has 6.2 billion parameters. Combined with model quantization, it can be deployed locally on consumer-grade graphics cards (only 6GB of video memory is required at the INT4 quantization level). ChatGLM-6B uses technology similar to ChatGPT and is optimized for Chinese Q&A and dialogue. After training on about 1T tokens of Chinese and English text, supplemented by supervised fine-tuning, feedback bootstrapping, reinforcement learning from human feedback and other techniques, the 6.2-billion-parameter ChatGLM-6B can generate answers that are quite in line with human preferences. For more information, please refer to our blog.

To make it easier for downstream developers to customize the model for their own application scenarios, we have also implemented a parameter-efficient fine-tuning method based on P-Tuning v2 (see the usage guide). At the INT4 quantization level, only 7GB of video memory is required to start fine-tuning.

However, because ChatGLM-6B is a small model, it is known to have considerable limitations, such as factual and mathematical logic errors, possible generation of harmful or biased content, weak contextual ability, confused self-awareness, and answers to English instructions that completely contradict its answers to the same instructions in Chinese. Please understand these issues before use to avoid misunderstandings. A larger ChatGLM based on the 130-billion-parameter GLM-130B is currently in internal testing.
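As a rough sanity check on the memory figures quoted above, the size of the weights alone can be estimated from the parameter count. This is only a back-of-the-envelope sketch: the bytes-per-parameter values are the usual ones for FP16, INT8 and INT4, and the function name is made up for illustration.

```python
# Rough weight-only memory estimate for a 6.2-billion-parameter model.
PARAMS = 6.2e9  # parameter count quoted in the introduction

# Storage cost per parameter at each precision level.
BYTES_PER_PARAM = {"FP16": 2.0, "INT8": 1.0, "INT4": 0.5}


def weight_gb(params: float, bytes_per_param: float) -> float:
    """Return the size of the weights alone in gigabytes (10**9 bytes)."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    print(f"{name}: about {weight_gb(PARAMS, size):.1f} GB of weights")
```

At INT4 the weights come to roughly 3.1 GB. The difference from the quoted 6GB is presumably taken up by activations, the attention cache and any parts of the model kept at higher precision, so treat the estimate as a lower bound.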

The first step is to install Python locally

The details of this step are omitted here; download and install the Python environment yourself.

Python download address: https://www.python.org/downloads/ (Download Python | Python.org)

Note: Install Python 3.9 or later; Python 3.10 is recommended.
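To confirm which interpreter is actually on the path, a short check like the one below can help. It is a minimal sketch that assumes the note above means Python 3.9 as the minimum; the constant and function names are illustrative.

```python
import sys

MIN_VERSION = (3, 9)  # assumed reading of the version note above


def python_is_supported(version=None, minimum=MIN_VERSION) -> bool:
    """Return True if a (major, minor, ...) version meets the minimum."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= minimum


print("Python", sys.version.split()[0], "supported:", python_is_supported())
```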

The second step is to download the project package

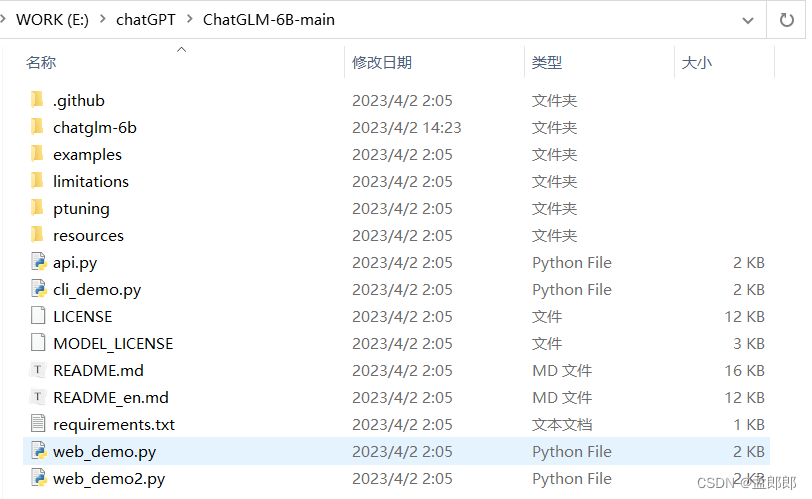

The download link is on the project page given above. Download the package and unzip it; here it is unzipped to E:\chatGPT\.

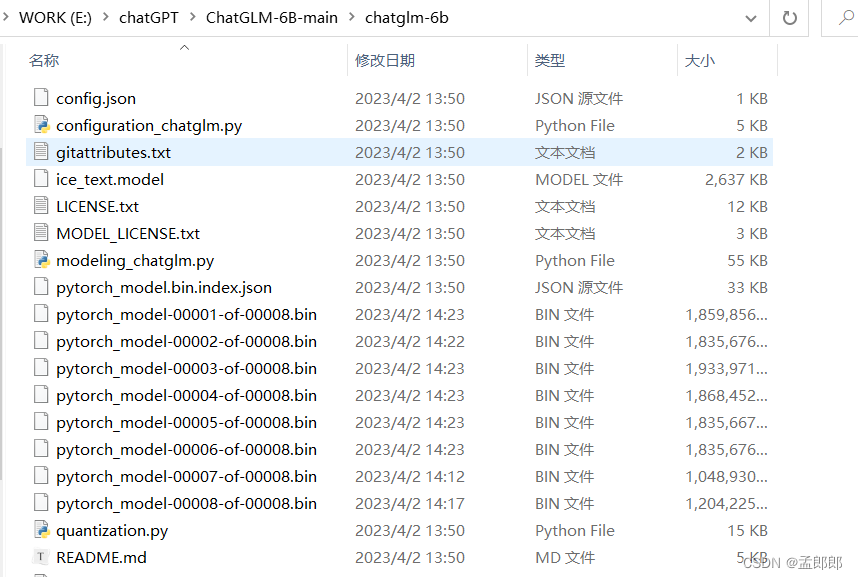

The third step is to download the model package chatglm

Download address: https://huggingface.co/THUDM/chatglm-6b/tree/main

Official website introduction:

ChatGLM-6B is an open-source dialogue language model that supports Chinese-English bilingual question answering. It is based on the General Language Model (GLM) architecture and has 6.2 billion parameters. Combined with model quantization, it can be deployed locally on consumer-grade graphics cards (only 6GB of video memory is required at the INT4 quantization level). ChatGLM-6B uses the same technology as ChatGLM and is optimized for Chinese Q&A and dialogue. After training on about 1T tokens of Chinese and English text, supplemented by supervised fine-tuning, feedback bootstrapping, reinforcement learning from human feedback and other techniques, the 6.2-billion-parameter ChatGLM-6B can generate answers that are quite in line with human preferences.

Note: After downloading, create a directory named chatglm-6b inside the project directory from the second step and put the model files in it.
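The local chatglm-6b directory is only useful if the program is pointed at it. The sketch below assumes the demo scripts load the model through the transformers from_pretrained calls shown in the project README; the helper names model_source and load_chat_model are made up for illustration and are not part of the project.

```python
from pathlib import Path

HUB_ID = "THUDM/chatglm-6b"  # repository named in the download address


def model_source(project_dir: str, local_name: str = "chatglm-6b") -> str:
    """Return the local model directory if it has files, else the Hub ID."""
    local_dir = Path(project_dir) / local_name
    if local_dir.is_dir() and any(local_dir.iterdir()):
        return str(local_dir)
    return HUB_ID


def load_chat_model(source: str):
    """Load tokenizer and model; needs transformers, torch and a CUDA GPU."""
    from transformers import AutoModel, AutoTokenizer  # imported lazily

    tokenizer = AutoTokenizer.from_pretrained(source, trust_remote_code=True)
    model = AutoModel.from_pretrained(source, trust_remote_code=True).half().cuda()
    return tokenizer, model.eval()


# Shows which source would be used when run from the project directory.
print(model_source("."))
```

If model_source returns the Hub ID rather than a local path, the directory is missing or empty and the program would fetch the model over the network.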

The fourth step is to download the dependency package

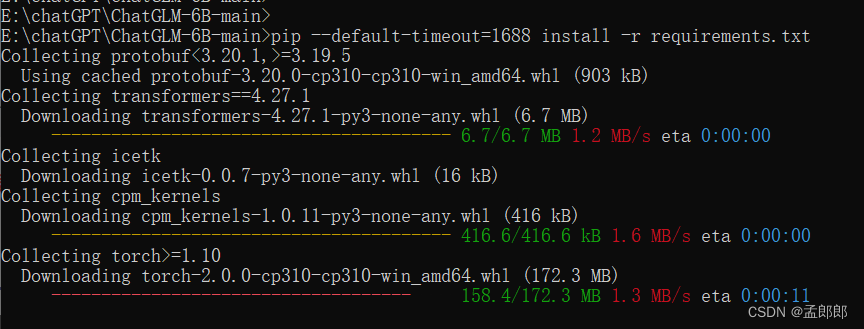

Press Win + R to open the Run dialog, enter cmd to open a command prompt, and change to the project directory.

Execute the following two commands in turn:

pip install -r requirements.txt

pip install gradio

Note: If an error is reported during execution, please refer to the error handling at the end of the article.
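Before moving on, it can be worth checking that the key packages are importable. The module list below is an assumption about what the demos need (torch, transformers, gradio), not a copy of requirements.txt, so adjust it to your copy of the project.

```python
import importlib.util

# Modules the demos are assumed to import; adjust to match requirements.txt.
ASSUMED_MODULES = ("torch", "transformers", "gradio")


def missing_modules(names=ASSUMED_MODULES):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


print("Missing modules:", missing_modules() or "none")
```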

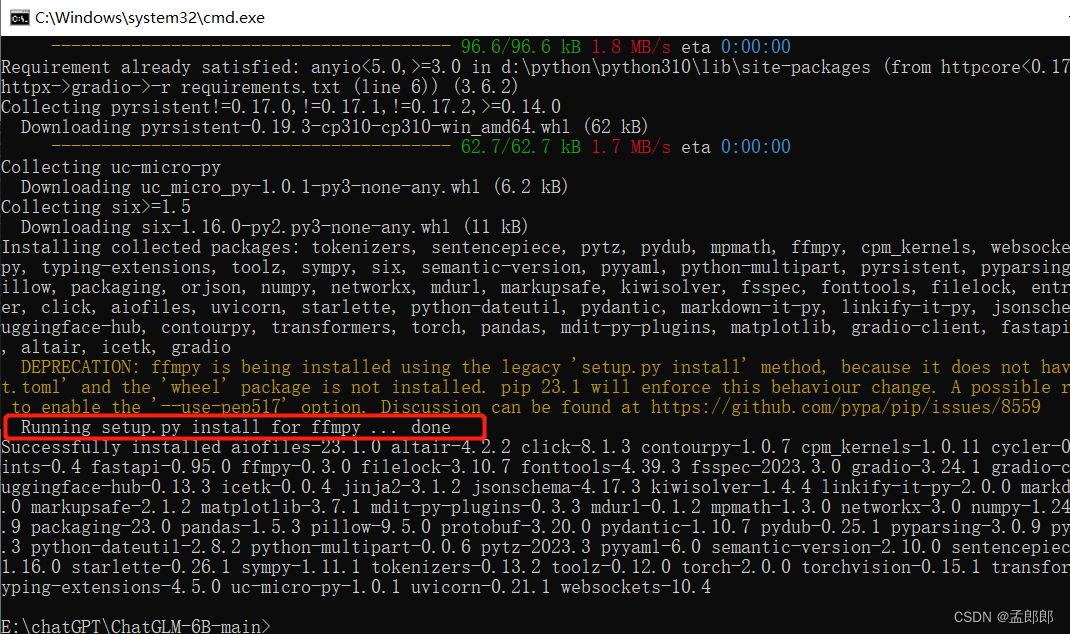

Wait for the dependencies to finish downloading. The result is as follows:

The fifth step is to run the web version demo

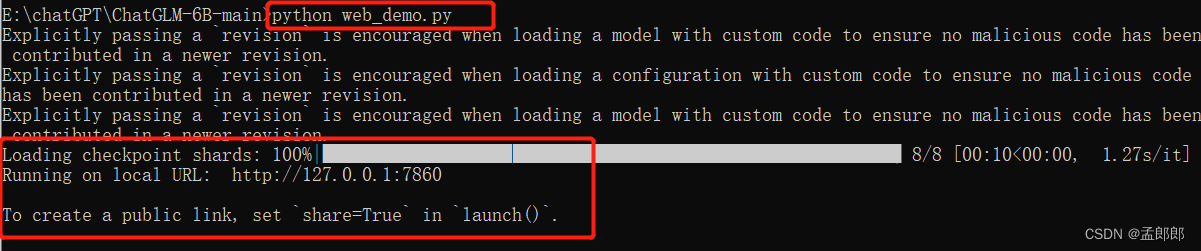

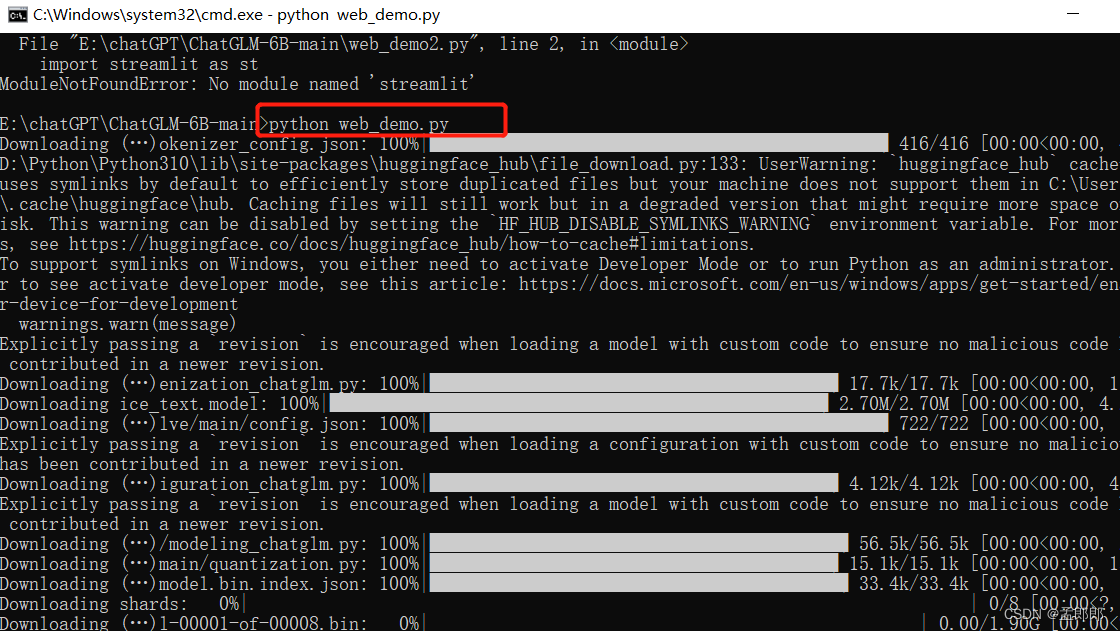

Execute the following command to run the web version of the demo:

python web_demo.py

The program starts a web server and prints its address; open that address in a browser to use the demo. The latest version of the demo has a typewriter (streaming) effect, which makes it feel much faster. Note that Gradio's network access is slow in China: when demo.queue().launch(share=True, inbrowser=True) is enabled, all traffic is forwarded through the Gradio server, which significantly degrades the typewriter effect. The default startup mode has therefore been changed to share=False. If you need public network access, change it back to share=True and start the demo again.
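The effect of the share flag can be tried on a tiny stand-in app before touching web_demo.py. This is a sketch only: the echo function replaces the model call, and launch_options and run_demo are illustrative names, not functions from the project.

```python
def launch_options(public: bool = False) -> dict:
    """Keyword arguments for demo.queue().launch(), local-only by default."""
    return {"share": public, "inbrowser": True}


def run_demo(public: bool = False) -> None:
    """Start a placeholder Gradio app; requires `pip install gradio`."""
    import gradio as gr  # imported lazily so the helper above works without it

    def echo(message: str) -> str:
        # Stand-in for the model call made by the real web_demo.py.
        return f"You said: {message}"

    demo = gr.Interface(fn=echo, inputs="text", outputs="text")
    demo.queue().launch(**launch_options(public))


print(launch_options())  # {'share': False, 'inbrowser': True}
```

Calling run_demo() serves the page locally; run_demo(public=True) asks Gradio for a public link, with the forwarding cost described above.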

The execution results are as follows:

Note: If the output does not match the screenshot above, see the error handling section at the end of this article.

The seventh step is to test the web version program

Open the address in the browser and visit, enter the question, and you can see that ChatGLM will give a reply.

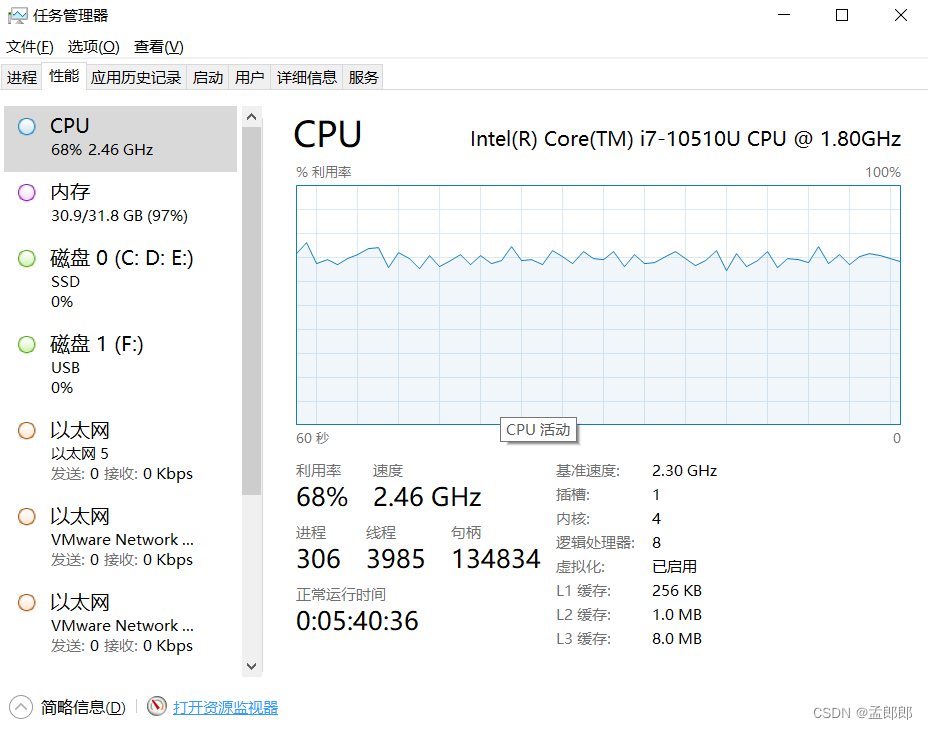

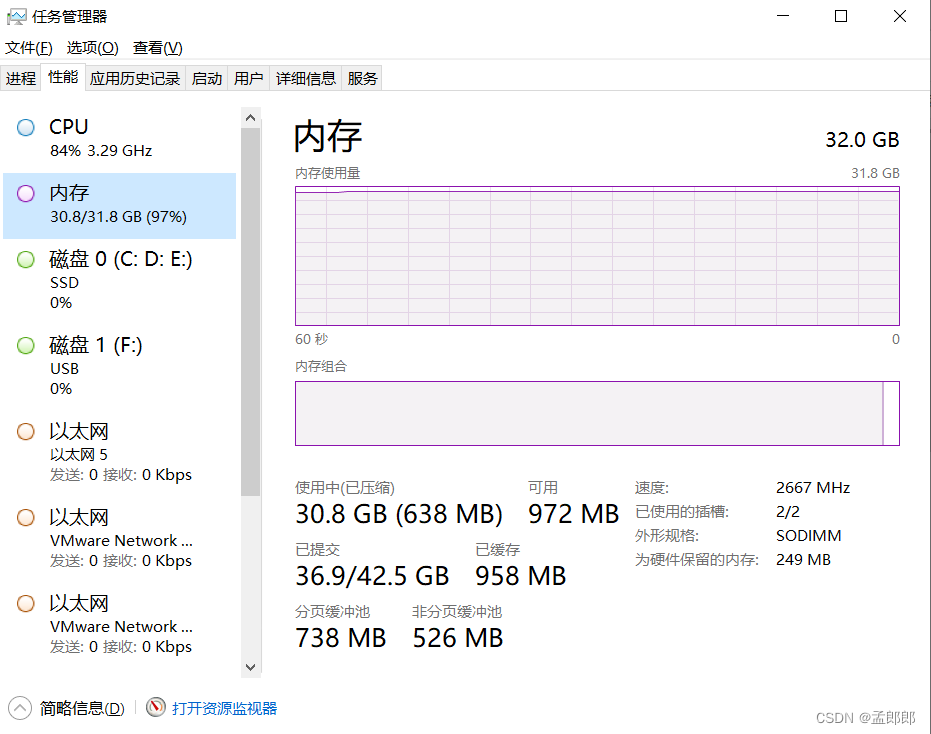

Very Good! Checking the performance of the computer, I feel that the CPU and memory are going to burst ^ ^

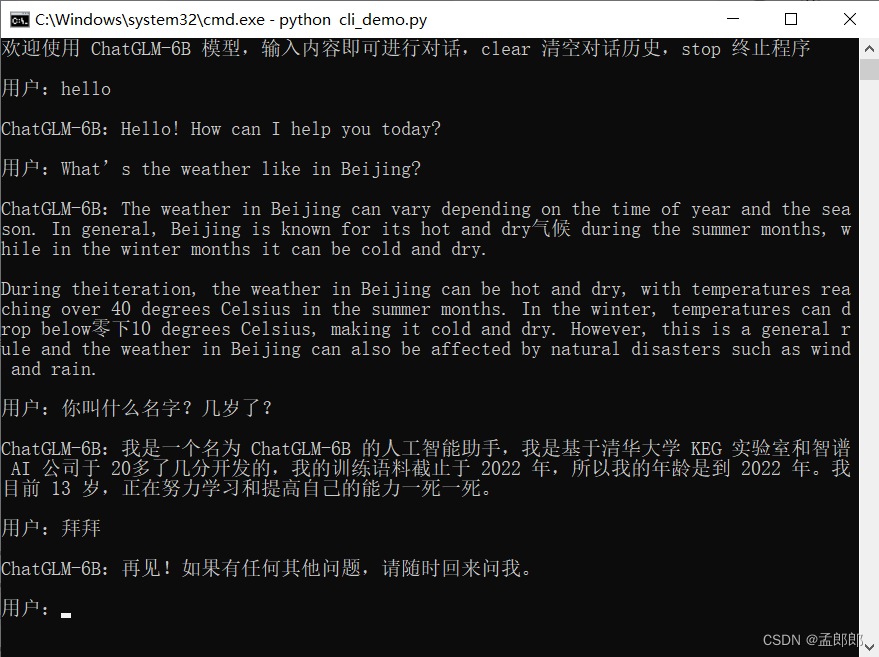

The eighth step, run the command line Demo

Execute the following command to run the demo of the command line version, as follows:

python cli_demo.py

The program holds an interactive dialogue on the command line. Type an instruction and press Enter to generate a reply, enter clear to clear the dialogue history, and enter stop to terminate the program.
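The clear and stop commands described above can be pictured as a small loop. The sketch below is not the project's cli_demo.py: echo_reply is a placeholder for the model, and the input is scripted so the example runs without a keyboard.

```python
def chat_loop(read_line, reply, write=print):
    """Run a minimal dialogue loop and return the final history."""
    history = []
    while True:
        query = read_line().strip()
        if query == "stop":    # terminate the program
            break
        if query == "clear":   # drop the dialogue history
            history = []
            write("History cleared.")
            continue
        answer = reply(query, history)
        history.append((query, answer))
        write(answer)
    return history


def echo_reply(query, history):
    # Placeholder for the model; the real demo would generate the answer.
    return f"[turn {len(history) + 1}] {query}"


# Scripted session standing in for keyboard input.
scripted = iter(["hello", "clear", "how are you?", "stop"])
final_history = chat_loop(lambda: next(scripted), echo_reply)
```

To try it interactively, pass `lambda: input("User: ")` as read_line instead of the scripted iterator.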

Error 1: Downloading dependent packages timed out

    E:\chatGPT\ChatGLM-6B-main>pip install -r requirements.txt
    Collecting protobuf<3.20.1,>=3.19.5
      Downloading protobuf-3.20.0-cp310-cp310-win_amd64.whl (903 kB)
         ---------------------------------------- 903.8/903.8 kB 4.0 kB/s eta 0:00:00
    Collecting transformers==4.27.1
      Downloading transformers-4.27.1-py3-none-any.whl (6.7 MB)
         ----------- ---------------------------- 2.0/6.7 MB 5.4 kB/s eta 0:14:29
    ERROR: Exception:
    Traceback (most recent call last):
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\urllib3\response.py", line 438, in _error_catcher
        yield
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\urllib3\response.py", line 561, in read
        data = self._fp_read(amt) if not fp_closed else b""
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\urllib3\response.py", line 527, in _fp_read
        return self._fp.read(amt) if amt is not None else self._fp.read()
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\cachecontrol\filewrapper.py", line 90, in read
        data = self.__fp.read(amt)
      File "D:\Python\Python310\lib\http\client.py", line 465, in read
        s = self.fp.read(amt)
      File "D:\Python\Python310\lib\socket.py", line 705, in readinto
        return self._sock.recv_into(b)
      File "D:\Python\Python310\lib\ssl.py", line 1274, in recv_into
        return self.read(nbytes, buffer)
      File "D:\Python\Python310\lib\ssl.py", line 1130, in read
        return self._sslobj.read(len, buffer)
    TimeoutError: The read operation timed out

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "D:\Python\Python310\lib\site-packages\pip\_internal\cli\base_command.py", line 160, in exc_logging_wrapper
        status = run_func(*args)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\cli\req_command.py", line 247, in wrapper
        return func(self, options, args)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\commands\install.py", line 419, in run
        requirement_set = resolver.resolve(
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\resolver.py", line 92, in resolve
        result = self._result = resolver.resolve(
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\resolvelib\resolvers.py", line 481, in resolve
        state = resolution.resolve(requirements, max_rounds=max_rounds)
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\resolvelib\resolvers.py", line 348, in resolve
        self._add_to_criteria(self.state.criteria, r, parent=None)
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\resolvelib\resolvers.py", line 172, in _add_to_criteria
        if not criterion.candidates:
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\resolvelib\structs.py", line 151, in __bool__
        return bool(self._sequence)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\found_candidates.py", line 155, in __bool__
        return any(self)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\found_candidates.py", line 143, in <genexpr>
        return (c for c in iterator if id(c) not in self._incompatible_ids)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\found_candidates.py", line 47, in _iter_built
        candidate = func()
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\factory.py", line 206, in _make_candidate_from_link
        self._link_candidate_cache[link] = LinkCandidate(
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\candidates.py", line 297, in __init__
        super().__init__(
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\candidates.py", line 162, in __init__
        self.dist = self._prepare()
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\candidates.py", line 231, in _prepare
        dist = self._prepare_distribution()
      File "D:\Python\Python310\lib\site-packages\pip\_internal\resolution\resolvelib\candidates.py", line 308, in _prepare_distribution
        return preparer.prepare_linked_requirement(self._ireq, parallel_builds=True)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\operations\prepare.py", line 491, in prepare_linked_requirement
        return self._prepare_linked_requirement(req, parallel_builds)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\operations\prepare.py", line 536, in _prepare_linked_requirement
        local_file = unpack_url(
      File "D:\Python\Python310\lib\site-packages\pip\_internal\operations\prepare.py", line 166, in unpack_url
        file = get_http_url(
      File "D:\Python\Python310\lib\site-packages\pip\_internal\operations\prepare.py", line 107, in get_http_url
        from_path, content_type = download(link, temp_dir.path)
      File "D:\Python\Python310\lib\site-packages\pip\_internal\network\download.py", line 147, in __call__
        for chunk in chunks:
      File "D:\Python\Python310\lib\site-packages\pip\_internal\cli\progress_bars.py", line 53, in _rich_progress_bar
        for chunk in iterable:
      File "D:\Python\Python310\lib\site-packages\pip\_internal\network\utils.py", line 63, in response_chunks
        for chunk in response.raw.stream(
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\urllib3\response.py", line 622, in stream
        data = self.read(amt=amt, decode_content=decode_content)
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\urllib3\response.py", line 560, in read
        with self._error_catcher():
      File "D:\Python\Python310\lib\contextlib.py", line 153, in __exit__
        self.gen.throw(typ, value, traceback)
      File "D:\Python\Python310\lib\site-packages\pip\_vendor\urllib3\response.py", line 443, in _error_catcher
        raise ReadTimeoutError(self._pool, None, "Read timed out.")
    pip._vendor.urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host='files.pythonhosted.org', port=443): Read timed out.

    E:\chatGPT\ChatGLM-6B-main>

The error message shows a timeout, which is most likely a network problem. Try adding a timeout setting to the command, as follows:

pip --default-timeout=1688 install -r requirements.txt
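On a very unstable connection the same command may need to be repeated. The sketch below wraps it in a retry loop using the current interpreter's pip; the retry count of 3 and the function names are assumptions for illustration.

```python
import subprocess
import sys


def pip_install_command(requirements="requirements.txt", timeout_s=1688):
    """Build the pip command shown above for the current interpreter."""
    return [sys.executable, "-m", "pip", f"--default-timeout={timeout_s}",
            "install", "-r", requirements]


def install_with_retries(attempts=3, **kwargs) -> bool:
    """Run pip up to `attempts` times; return True on the first success."""
    for attempt in range(1, attempts + 1):
        print(f"pip attempt {attempt} of {attempts}")
        if subprocess.run(pip_install_command(**kwargs)).returncode == 0:
            return True
    return False


# Show the command without running it; call install_with_retries() to run.
print(" ".join(pip_install_command()[1:]))
```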

Error 2: The model package is downloaded again at run time

If the output shows that the model package is being downloaded again when the program runs, instead of using the model package prepared in the third step, copy the model package into the cache directory that the program uses at run time. The cache path may look like this:

C:\Users\<user name>\.cache\huggingface\hub\models--THUDM--chatglm-6b\snapshots\fb23542cfe773f89b72a6ff58c3a57895b664a23

Copy the model package into this directory and run the program again.
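The snapshot folder name differs between model revisions, so it may be easier to look it up than to copy it from this article. The sketch below assumes the default cache location under the user's home directory, matching the path above; the cache may live elsewhere if it has been relocated through environment variables, and snapshot_dirs is an illustrative name.

```python
from pathlib import Path


def snapshot_dirs(repo_id="THUDM/chatglm-6b", cache_root=None):
    """List snapshot folders for a model in the Hugging Face hub cache."""
    if cache_root is None:
        # Assumed default location, matching the path shown above.
        cache_root = Path.home() / ".cache" / "huggingface" / "hub"
    repo_dir = Path(cache_root) / ("models--" + repo_id.replace("/", "--"))
    snapshots = repo_dir / "snapshots"
    if not snapshots.is_dir():
        return []
    return sorted(p for p in snapshots.iterdir() if p.is_dir())


# Prints nothing if the program has not created the cache directory yet.
for path in snapshot_dirs():
    print(path)
```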

Good Luck!

Author:evilangel

link:http://www.pythonblackhole.com/blog/article/81184/17fcf256557cca726acb/

source:python black hole net

Please indicate the source for any form of reprinting. If any infringement is discovered, it will be held legally responsible.