Fixing NaN loss during YOLO series training and all-zero P/R/mAP during testing (a major pitfall on GTX 16xx series graphics cards)

posted on 2023-05-21 16:20

☆ This problem is a major pitfall for GTX 16xx users: almost every GTX 16xx user runs into it when using YOLO series algorithms.

This method is an incomplete workaround: it solves the problem at the cost of longer training time. I tested it with YOLOv5 and YOLOv7 on a GTX 1660 Ti laptop, and it resolved the problem in both cases.

0 Preface (not very useful; you can skip straight to the solution)

Recently I have been working on object detection. Because the real-time requirements are high, I chose the YOLO series of algorithms. My first choice was the freshly released YOLOv7. Training ran without problems, but in the final test no bounding boxes were detected. At first I thought the model was poorly trained, but validation during training did produce bounding boxes. In the end I fell back to YOLOv5. (Why not Meituan's YOLOv6? On datasets other than those used in the paper, YOLOv6 does not perform as well as YOLOv5; those who know, know.) With YOLOv5, however, NaN values appeared during training, so I applied a fix found in the yolov5 issues on GitHub: do not use AMP. Then, during validation, P/R/mAP were all 0. I searched and searched without finding the cause. Finally, after reading the source code of YOLOv5's train.py, I found a solution.

1 The cause of the problem

Because of problems in NVIDIA's own software, some of the CUDA code used by PyTorch misbehaves on these cards: the fp16 (float16) data type produces NaN values in certain operations such as convolution. As a result, NaN values appear during training, and nothing is detected during validation or testing.
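To see whether a given card shows this behaviour, one option is to run a single convolution in fp16 on the GPU and look for NaN values in the output. The following is only a minimal sketch: the function name `fp16_conv_produces_nan` and the layer sizes are my own assumptions, not part of YOLO, and a clean result on one small convolution does not prove that a card is unaffected.

```python
try:
    import torch
except ImportError:  # allows the script to exit cleanly outside a PyTorch environment
    torch = None


def fp16_conv_produces_nan(channels=16, size=64, seed=0):
    """Run one fp16 convolution on the GPU and report whether the output has NaNs.

    Returns True or False on a CUDA machine, or None when PyTorch or CUDA
    is not available. The name and the sizes are illustrative assumptions.
    """
    if torch is None or not torch.cuda.is_available():
        return None
    torch.manual_seed(seed)
    device = torch.device("cuda")
    # Build the layer and the input in float32, then cast both to float16.
    conv = torch.nn.Conv2d(3, channels, kernel_size=3, padding=1).to(device).half()
    x = torch.rand(1, 3, size, size, device=device).half()
    with torch.no_grad():
        y = conv(x)
    return bool(torch.isnan(y).any().item())


if __name__ == "__main__":
    print("fp16 convolution produced NaN:", fp16_conv_produces_nan())
```

If this prints True on a GTX 16xx card, the fp16 path is the likely culprit and the changes described in the next section are worth trying.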

2 Solutions

YOLO V5

With YOLOv5, detection itself produces no NaN values and recognizes objects normally; the problems occur only during training.

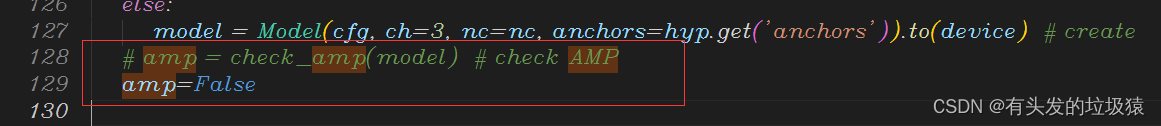

Now let's actually solve the problem. Search for amp in train.py, comment out the check_amp call, and assign False to amp directly, as shown in the figure below:

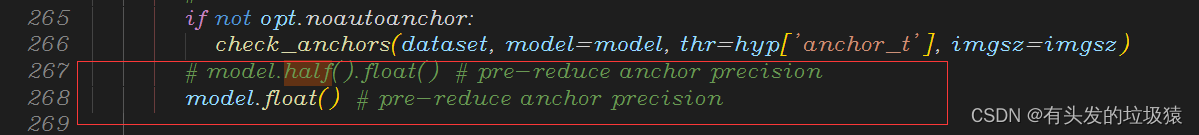
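For context, here is a rough sketch of how such an amp flag is typically consumed in a PyTorch training loop. It follows the standard autocast/GradScaler pattern and is not copied from train.py; the tiny linear model, the MSE loss and the `train_step` and `demo` helpers are hypothetical stand-ins for illustration.

```python
try:
    import torch
except ImportError:  # keeps the sketch importable without PyTorch
    torch = None


def train_step(model, images, targets, optimizer, scaler, amp):
    """One optimisation step; with amp=False the forward pass stays in float32."""
    optimizer.zero_grad()
    with torch.cuda.amp.autocast(enabled=amp):
        # Stand-in loss; a real detector computes its own detection loss here.
        loss = torch.nn.functional.mse_loss(model(images), targets)
    scaler.scale(loss).backward()  # plain loss.backward() when the scaler is disabled
    scaler.step(optimizer)         # plain optimizer.step() when the scaler is disabled
    scaler.update()                # no-op when the scaler is disabled
    return loss.item()


def demo():
    """Run one step with AMP switched off; returns the loss, or None without PyTorch."""
    if torch is None:
        return None
    amp = False  # hard-coded instead of being decided by an automatic check
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model = torch.nn.Linear(4, 1).to(device)  # hypothetical stand-in for the detector
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    scaler = torch.cuda.amp.GradScaler(enabled=amp)
    images = torch.rand(8, 4, device=device)
    targets = torch.rand(8, 1, device=device)
    return train_step(model, images, targets, optimizer, scaler, amp)


if __name__ == "__main__":
    print("loss with AMP disabled:", demo())
```

With amp set to False, both autocast and the gradient scaler become pass-throughs, so the forward and backward passes run entirely in float32. That is why this single assignment is enough to remove fp16 from the training step.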

After this change, training with train.py should no longer produce NaN values. If it still does, close this blog and look for another method. Next, you will find that P/R/mAP are all 0 during validation. Search for the half keyword in train.py and change every .half() to .float() (see the accompanying screenshot).

After doing this, you will find that the problem is still not solved.

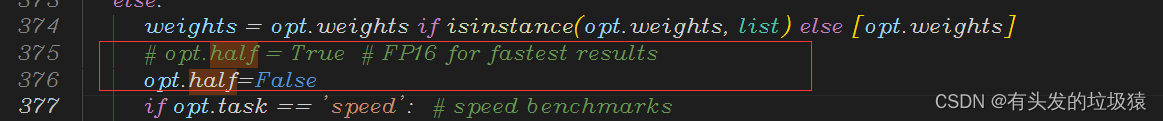

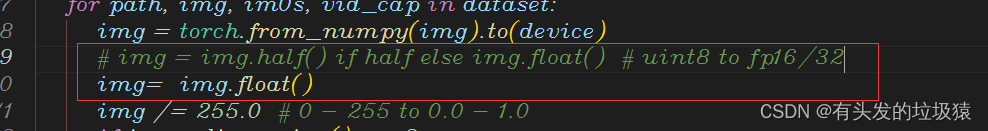

To solve it, you also need to set every half to False in val.py and change im.half() if half else im.float() to im.float() (see the accompanying screenshots).

After these changes, train.py ran again without any problems.

YOLO V7

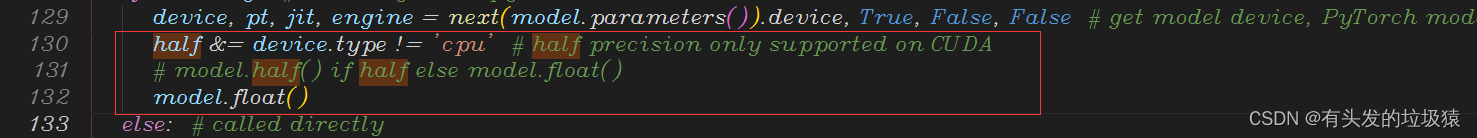

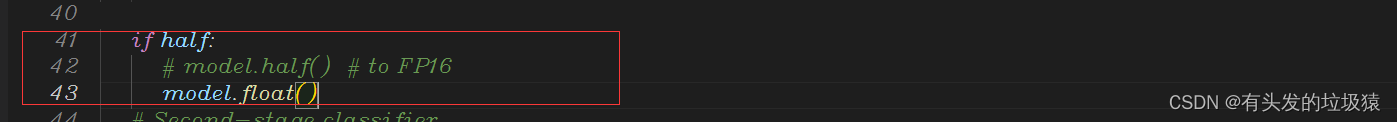

In my tests, train.py works fine; the problem is mainly in detect.py. The fix is to change every .half() to .float(), or to set half to False (see the accompanying screenshots).
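Since the fix depends on catching every occurrence, a small helper that lists all lines mentioning half can make the manual edit less error-prone. This is a minimal sketch using only the standard library; `find_half_usages` is a hypothetical helper, and the sample lines are written in the style of a detection script for illustration, not quoted from any repository.

```python
import re

# Matches the standalone name `half`, including the method call `.half()`,
# but not longer identifiers such as `half_size`.
HALF_PATTERN = re.compile(r"\bhalf\b")


def find_half_usages(source):
    """Return (line_number, stripped_line) for each code line that mentions half."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        # Ignore trailing comments (naive: also cuts at a '#' inside a string).
        code = line.split("#", 1)[0]
        if HALF_PATTERN.search(code):
            hits.append((number, line.strip()))
    return hits


# Illustrative sample only; run the helper on the real file contents instead.
sample = """half = device.type != 'cpu'  # half precision only supported on CUDA
if half:
    model.half()
img = img.half() if half else img.float()
img /= 255.0
"""

for number, line in find_half_usages(sample):
    print(number, line)
```

To check a real file, pass its text to the helper, for example the result of reading train.py, val.py or detect.py with pathlib.Path(...).read_text(). The helper only reports lines; each change is still made by hand, which seems safer than an automatic rewrite.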

3 Summary

Ultimately, the root cause is a problem in NVIDIA's CUDA packages for the GTX 16xx series. Some people suggest downgrading to PyTorch 1.10.1 with CUDA 10.2, but PyTorch no longer officially supports CUDA 10.2.

This workaround makes the graphics card compute in single-precision floating point instead of half precision. Numerical precision is higher this way, but training time and video memory usage both increase. Still, that is better than being unable to train or detect. If you know a better way, please share it in the comments.
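The memory cost is easy to estimate for a single tensor, because a float32 element takes 4 bytes and a float16 element takes 2. The sketch below works this out for one input batch; the batch shape of 16 RGB images at 640x640 is an assumed example, and `tensor_mib` is a hypothetical helper. Real usage also includes weights, activations and optimizer state, so treat the numbers as an illustration of the doubling rather than a prediction of total video memory.

```python
import struct

BYTES_FP16 = struct.calcsize("e")  # IEEE 754 half precision: 2 bytes
BYTES_FP32 = struct.calcsize("f")  # IEEE 754 single precision: 4 bytes


def tensor_mib(shape, bytes_per_element):
    """Size in MiB of a dense tensor with the given shape and element width."""
    elements = 1
    for dim in shape:
        elements *= dim
    return elements * bytes_per_element / 2**20


batch = (16, 3, 640, 640)  # assumed example: 16 RGB images at 640x640

print(tensor_mib(batch, BYTES_FP16))  # 37.5
print(tensor_mib(batch, BYTES_FP32))  # 75.0
```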

Author:cindy

link:http://www.pythonblackhole.com/blog/article/25254/2b695ac61c05bc5ce5ec/

source:python black hole net

Please indicate the source for any form of reprinting. If any infringement is discovered, it will be held legally responsible.
